Is Shadow AI Quietly Reshaping Your Workplace Security Posture?

Key Takeaways

- Shadow AI usage has risen sharply in the workplace, as Ivanti’s 2025 Technology at Work Report shows.

- Open dialogue and clear policies promote responsible AI use and minimize risks associated with shadow AI and free AI tools.

- Gradual, controlled implementation, guided by consultation with employees about their AI usage needs, allows security leaders to balance business goals and security requirements.

AI tools have seen a meteoric rise in the workplace. What was once the domain of highly specialized tech roles is now commonplace: Ivanti’s 2025 Technology at Work Report found that 42% of office workers say they’re using gen AI tools, like ChatGPT, at work, up 16 percentage points from the previous year.

The catch? Many of these productivity gains happen under the table. Among those who reported using gen AI tools, 46% say that some (or all) of the tools they use are not employer-provided. And one in three workers keeps their AI productivity tools a secret from their employer.

Gen AI tools can be a productivity multiplier. But they’re also a risk to data security — particularly when they’re used without employer oversight.

What is shadow AI?

Unsanctioned use of AI is just another flavor of shadow IT (i.e., the use of technology without IT approval).

Shadow AI introduces risks similar to those of other shadow IT, with an added layer of concern: the sheer volume of proprietary data generative AI needs to be effective. Free generative AI tools (and some paid ones) may use an organization’s data or employee queries to train their models, amplifying the risk of data leaks and noncompliance.

The recent revelation that shared ChatGPT conversations were crawlable by search engines (although OpenAI swiftly changed course) should be a wake-up call: without proper controls, third parties can use your data in ways you never intended. Some free tools, ChatGPT included, can be configured to meet security policies, but that’s simply not possible when employees use them covertly.

Free tools like ChatGPT aren’t the only shadow AI risk. One unexpected source is software you already use. In the rush to add AI features, tools that IT previously approved may now pose new risks. If infosec teams don’t know about and evaluate these new features, the features effectively bypass third-party risk management processes.

Why a risk-first approach to AI is crucial

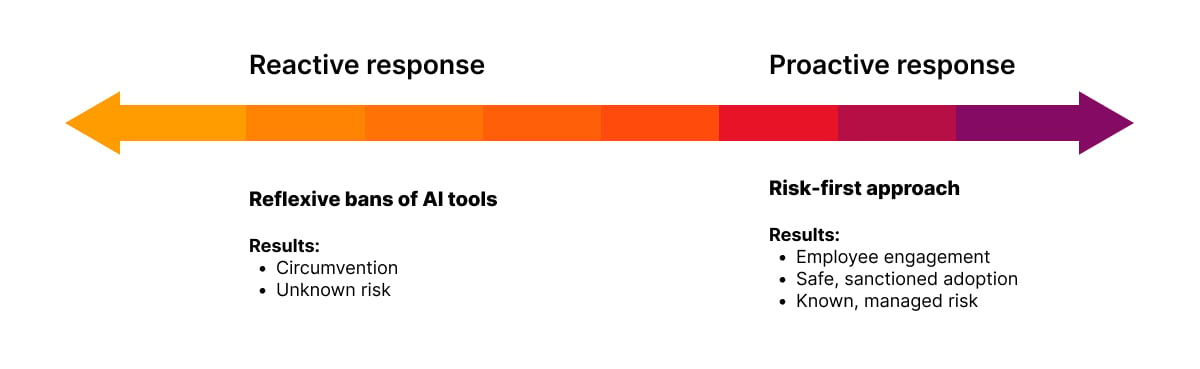

Whether for gen AI or other tools, shadow IT results from the lack of a defined, reasonable way to test tools or get work done. AI isn’t going away, so companies need to approach adoption proactively: banning tools doesn’t stop employees from trying to use them to boost their productivity and make their jobs easier.

I spend the bulk of my time assessing risk, including the risks AI tools pose. Often, we have to weigh risk against an opportunity to improve the business: in this case, employee productivity gains and second-order impacts (like employee satisfaction or having time to work on more strategic projects).

In short, we need to ask: Is there a way to introduce the tools employees are asking for and reap the benefits they offer while keeping the risk to an acceptable level?

This is where a risk-first approach enters the picture. A risk-first approach to AI adoption focuses on the data that needs to go into the AI and how the third party handles that data. It resembles vendor risk management, so organizations can reuse established practices and processes, adjusted for AI-specific questions.

Key questions to ask include:

- Will our data be used to train the AI model?

- How long is our data retained?

- What protections exist to reduce the risk of our data being exposed?

- Who has the rights to intellectual property generated using the AI?
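To record vendor answers to these questions consistently, a team could capture them as a simple structured checklist. The Python sketch below is illustrative only: the `AIVendorAnswers` record, the `open_risks` helper, the example tool name, and the 30-day retention threshold are hypothetical assumptions, not Ivanti’s actual process or any industry standard. It simply flags each question whose answer needs follow-up before a tool is approved.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class AIVendorAnswers:
    """Hypothetical record of a vendor's answers to the four key questions."""
    tool_name: str
    trains_on_customer_data: Optional[bool]  # None means the vendor hasn't answered
    retention_days: Optional[int]            # None means unknown or indefinite
    exposure_protections: List[str]          # e.g. ["encryption at rest", "SSO"]
    customer_owns_output_ip: Optional[bool]  # None means unclear


def open_risks(answers: AIVendorAnswers, max_retention_days: int = 30) -> List[str]:
    """Return the questions whose answers need follow-up before approval.

    The default 30-day retention limit is an illustrative assumption.
    """
    risks = []
    # Anything other than an explicit "no" is treated as a training risk.
    if answers.trains_on_customer_data is not False:
        risks.append("Data may be used for model training (or vendor has not said).")
    # Unknown retention is treated the same as retention beyond policy.
    if answers.retention_days is None or answers.retention_days > max_retention_days:
        risks.append("Retention period is unknown or exceeds policy.")
    # An empty list means no documented exposure protections.
    if not answers.exposure_protections:
        risks.append("No documented protections against data exposure.")
    # Only an explicit "yes" on output ownership clears this item.
    if answers.customer_owns_output_ip is not True:
        risks.append("IP rights to generated output are unclear or not ours.")
    return risks


# Usage with a hypothetical tool: 90-day retention and unclear IP terms get flagged.
candidate = AIVendorAnswers(
    tool_name="ExampleWriter",
    trains_on_customer_data=False,
    retention_days=90,
    exposure_protections=["encryption at rest"],
    customer_owns_output_ip=None,
)
for item in open_risks(candidate):
    print("-", item)
```

Treating "unknown" the same as "unfavorable" reflects the risk-first idea: a question the vendor can’t answer is an open risk until someone resolves it.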

Minimizing AI sprawl is a critical piece of this work. As vendors introduce specialized AI tools, and as you bring on more vendors and grant their AI tools access to your data, your risk increases. The same is true of existing tools that suddenly add AI features without any change in cost or contract, which makes it difficult to keep an accurate inventory of AI tools.

Adopting an AI governance framework at Ivanti

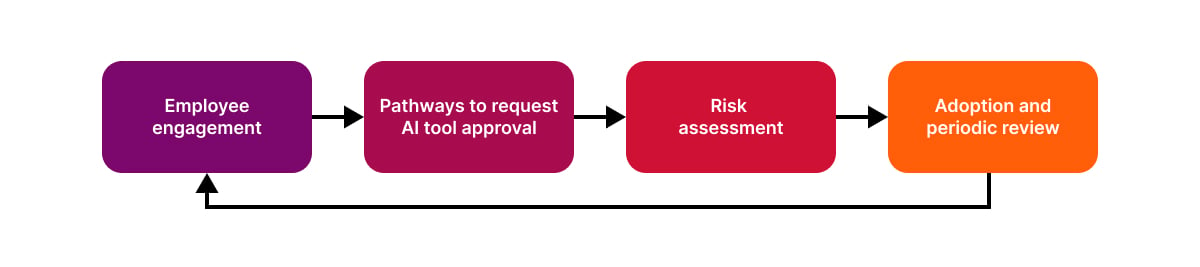

Within Ivanti, we combat shadow AI with a risk-first approach that starts and ends with employee engagement.

Bringing AI use out of the shadows

While we’d never encourage shadow AI, employees who use it have valuable knowledge to share about how to integrate AI into workflows. So instead of banning all AI use, we make sure employees have a clear path to request AI tools for work and regular opportunities for open dialogue.

Fostering open dialogue makes employees comfortable discussing which tools help them succeed, which makes it far more likely they will use those tools (or approved equivalents) safely. It also lets employees become active partners in developing appropriate governance rather than trying to skirt restrictions.

A measured approach to AI implementation and adoption

Once a tool is approved, it is important to implement it properly and to understand what data you have given it access to. This matters all the more given the data governance and security risks that gen AI tools pose to organizations. Viewing AI through the lens of data governance can help address many parts of AI risk.

At Ivanti, we take a measured approach: a dedicated team runs controlled tests of gen AI tools with other teams. We then establish feedback loops and roll out adoption gradually to avoid disruption.

Building a feedback loop for AI tools

We continually ask:

- How are Ivanti's employees using AI?

- Do they like it?

- What feedback do they have?

- How can we improve the tool?

This ongoing conversation ensures we're using AI responsibly while meeting employees' productivity needs.

It’s not about jumping on the AI bandwagon. It’s about knowing whether it’s worth it, for the business and for the people using it. Shadow AI boosts one person’s productivity. Extend that productivity across the organization with sanctioned tools, and you have a meaningful improvement for the company as a whole.

Proactively combating shadow AI

The running theme here is that even though AI, and particularly shadow AI, poses new and concerning risks, it is here to stay. Employees who use AI under the radar aren’t ill-intentioned; if anything, they’re trying to benefit the business, even if they’re going about it the wrong way.

A proactive, risk-first approach to AI adoption recognizes this reality. Instead of reactive bans that only encourage circumvention, we have to engage employees to understand the problems they’re using AI to solve so that we can provide them with safe options that meet our security and data privacy requirements.

FAQs

What is shadow AI?

Shadow AI is a type of shadow IT in which employees use unsanctioned AI tools without the knowledge of their IT or security teams.

How does shadow AI put company data at risk?

Shadow AI can expose proprietary data and introduce security threats because the tools operate outside official oversight and safeguards.

What is a risk-first approach to AI adoption?

A risk-first approach to AI adoption means evaluating and managing potential risks to data security, privacy and intellectual property before implementing any AI tools.

How can businesses safely adopt AI tools?

Companies should provide clear policies, ongoing education about risks, and approved channels for requesting and using AI tools securely.